Thoughts about 3D Technology

I have been discussing the merits and faults of 3D with my colleagues ever since a demonstration I saw in April 2011 at the NAB convention in Las Vegas. I thought I would try to set down some of these thoughts and see whether anyone is interested in replying.

I would like to start off with describing a simple experiment that illustrates the basic idea behind modern 3D video:

With both eyes open, point your finger at the place where the ceiling meets two walls. Now close your right eye. If your left eye is dominant, your finger will not appear to move, or will move only a little. Open both eyes and point again. Now close your left eye. This time your finger will appear to move quite a bit. If the results are reversed, you are right eye dominant. Note that eye dominance does not necessarily correlate with your dominant hand.

What is important for this discussion is the difference in viewpoint between your two eyes.

This difference is the mechanism behind depth perception, and it is what a modern 3D camera seeks to emulate. These cameras use two lenses, and the three-dimensional signal is derived from the differences between the two images, which is analogous to how your brain decodes your own three-dimensional vision. But these images are displayed on two-dimensional screens, so glasses are used to give depth to the image.

What is interesting to me is that while watching a live 3D broadcast of a sporting event, I found the image very disconcerting. In fact, I felt a little nauseous! A possible explanation for my discomfort is that when I am moving through space, my brain is fed information from all of my senses, not just my eyes. My orientation is affected by my inner ear (for balance), by my hearing, and by the interrelation of my movements, gravity and so forth. When viewing a live 3D broadcast, I am affected only by the visual content, which is akin to looking through someone else’s eyes without being connected to the rest of their sensory input. This is particularly apparent when the images from handheld cameras are on screen. A realistic surround sound image would be helpful, but of course the sound field is different from every visual perspective, and a live surround mix cannot possibly take all of the various perspectives into account. What is generally done is that one perspective is chosen, and the surround matrix is built to be coherent with it.

It is possible that stabilizing the cameras with gyroscopically controlled pan heads will alleviate some of the discomfort, but that still leaves the rest of the sensory inputs to be addressed. To my knowledge, no definitive studies have been published on the effect of surround audio combined with 3D imagery in terms of image stability. There have certainly been papers on the localization of sound in a surround matrix, especially with respect to video game production. Studies indicate that audio information presented in 3D improves reaction time, as noted in certain high-stress environments such as airplane cockpits.[1] Will accurate 3D audio reproduction have a positive effect on viewer reaction to 3D disorientation? That remains to be seen.

3D technology is still relatively new, and is being studied and improved upon constantly. Perhaps the answer may be to place the viewer in the center of a holographic matrix where one could possibly have a better connection between motor control and perception.

[1] Psyko Audio Labs, Surround Technologies for headphones (PDF).

I more or less cited this article when talking about the correlation between 3D audio and reaction time. I have noticed that when mixing for TV - by having the various "instructions" (director/producer, etc) come from different speakers in different locations, I can react faster and understand better.

Daniel Littwin , director New York Digital.

contact: daniel.nydigital@gmail.com

São Paulo, Brazil


Mixing and Live Audio Acquisition For Television Sports

Mixing and live audio acquisition for television sports presents several challenges:

Maintaining a consistent sound level while presenting a dynamic fast paced event.

Keeping the audio coherent with the on-screen action.

Signal routing for transmission, replay, resale and audio.

Maintaining signal integrity, continuity and lip sync, whether in mono, stereo or surround.

Creating and monitoring multiple mixes simultaneously: Surround, Stereo, Mono, router feeds, alternate mixes for international clients, etc.

Depending on the complexity of the production, an engineer has between 5 hours and 5 weeks to set up the show.

For a typical NBA game one generally has 4 hours. This type of show will: be broadcast on 1 network, involve 1 field of play, have 1 announce position and possibly an “effects feed” for an associated second language or radio broadcast.

For the US Open Tennis tournament, the total equipment set up time is more than 3 weeks. This show involves over 125 networks, 5 fields of play, separate EFX feeds from each tennis court in surround, stereo and mono as well as ambience only, over 100 different announce cabins, multiple interview positions, internet, internal stadium webcasts, an all-encompassing intercom system, many editing facilities, etc.

Live Mix techniques, in general:

Stems – audio subgroups of the broadcast mix.

Stems facilitate ease of operation for live acquisition. Establishing international sound sub-mixes, creating IFB mixes and router feeds are all made easier through the use of stems. For editing and rebroadcast they are essential to recreate the sound of the show quickly and easily while allowing the editor to change segment lengths. Operationally, the subgroups are sent to DAs.

The signals are then returned to the desk as inputs, to allow access to auxiliary sends and to allow level manipulation without affecting the group master gain. This is very important for enabling isolated recordings for editing purposes, signal distribution to downstream clients and off line recording for replays with audio during the live broadcast.

1 EFX - sound effects or action. US television refers to action mics as EFX mics.

2 IFB - interrupted foldback

3 DA - distribution amplifier

Grouping and processing

The Announce Group:

Here, I generally use 2 levels of dynamics.

At the group level, I insert a limiter, generally at a ratio of 10:1, with a threshold of -1 dB, a medium-slow attack time and a very fast release. This limiter acts as a safety, to prevent the overall submix from over-modulating. The insert point should be prefader.

Individual channels have “soft knee” compressors at ratios of 1.8:1 with medium attack and release times. The insert point should be prefader, post filter, pre-eq, if the console allows. These compressors have the effect of enhancing speech intelligibility and of keeping the announcers more “present” in the mix. Additionally, they provide an extra gain stage, if necessary.
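The two levels of dynamics described above can be sketched as a static gain curve. This is only an illustration, not the behavior of any particular console: the function name, the -20 dB channel threshold and the 6 dB knee width are assumptions chosen for the example, and attack and release times are ignored.

```python
def compressor_output_db(level_db, threshold_db, ratio, knee_db=0.0):
    """Static gain curve of a downward compressor; all values in dB."""
    over = level_db - threshold_db
    if knee_db > 0 and abs(over) <= knee_db / 2:
        # Soft knee: quadratic blend between the 1:1 and 1:ratio slopes.
        return level_db + (1 / ratio - 1) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    if over <= 0:
        # Below the threshold the signal passes unchanged.
        return level_db
    # Above the threshold the overshoot is divided by the ratio.
    return threshold_db + over / ratio


# Group limiter from the text: 10:1 at -1 dB. A peak 10 dB over the
# threshold comes out only 1 dB over it.
limiter_out = compressor_output_db(9.0, threshold_db=-1.0, ratio=10.0)

# Channel compressor from the text: 1.8:1 with a soft knee. The -20 dB
# threshold and 6 dB knee width are assumed values for illustration only.
channel_out = compressor_output_db(-11.0, threshold_db=-20.0, ratio=1.8, knee_db=6.0)
```

The gentle 1.8:1 ratio leaves more than half of every overshoot intact, which is why it works as a presence control, while the 10:1 limiter all but stops the submix at its threshold.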

Equalization normally entails a high pass filter set at 75 Hz, a small, narrow “bump” in the low midrange somewhere between 450 Hz and 800 Hz to enhance the individual voice, and a somewhat wider presence peak somewhere between 2200 Hz and 3200 Hz for clarity. A low pass filter is used in the event of high frequency noise problems that arise during broadcast or that cannot be solved in the allotted set up time.
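To get a feel for what a 75 Hz high pass filter removes, the sketch below evaluates the magnitude response of an ideal Butterworth high pass. The filter type and the second-order slope are assumptions made for the example; the actual slope depends on the console.

```python
import math


def highpass_gain_db(freq_hz, cutoff_hz=75.0, order=2):
    """Gain in dB of an ideal Butterworth high pass filter at freq_hz."""
    ratio = cutoff_hz / freq_hz
    return -10.0 * math.log10(1.0 + ratio ** (2 * order))


# Gain one octave below, at, and one octave above the 75 Hz cutoff.
for freq in (37.5, 75.0, 150.0):
    print(f"{freq:6.1f} Hz: {highpass_gain_db(freq):6.2f} dB")
```

Under these assumptions the filter is about 3 dB down at the cutoff and roughly 12 dB down an octave below it, while the low midrange “bump” region above 450 Hz is essentially untouched.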

Most of the processing is done to enhance intelligibility. Care must be taken not to allow harshness in the announcer voices, however.

The announce group (or groups) is then sent to a DA and returned to the desk. It is also routed to various listening positions throughout the mobile unit and to the video router or recorders as needed.

To fix the physical location of the announcers in the stadium, I place the main stereo ambient pair in the announce cabin (or just outside) so that the stadium noise arrives at the ambient mics at the same time as it arrives at the announce mics. This gives the home viewer the illusion of sitting in the announce cabin, watching the game with the announcers. (See section Ambience)

Ambience:

I normally build a separate ambience or crowd group. This allows me to limit or compress the crowd mics separately for routing purposes as well as allowing the compressors for the action mics group to be affected by just the action and not be affected by crowd response.

TV sports are generally shown from 1 perspective point, with varying views added to enhance coverage. (Golf, track and field and gymnastics are notable exceptions to this generality.) The perspective is generally the announcer’s viewpoint. To place the announcers in the stadium for the home viewer, one should use a stereo coincident or near-coincident pair. My preference is a matched pair of cardioid condenser mics in an ORTF configuration (2 matched cardioid capsules set 17 cm apart, at an angle of 110 degrees). After experimenting with X/Y and M/S pairs, I have found that ORTF seems to best mimic human hearing. I place the mics so that they do not “hear” the announcers, but the arrival time of the ambient noise at the crowd mics is the same (or almost the same) as at the announce mics. This is, of course, limited to the announce cabin.
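The timing argument above can be put into numbers. The sketch below estimates the arrival-time difference between the two ORTF capsules for a distant source, and the delay caused by placing the ambient pair farther from the crowd than the announce mics. The speed of sound (343 m/s) and the function names are assumptions for the example; the 17 cm spacing is from the text.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees C
ORTF_SPACING_M = 0.17       # capsule spacing quoted in the text


def capsule_delay_ms(source_angle_deg, spacing_m=ORTF_SPACING_M):
    """Far-field arrival-time difference between the capsules.

    0 degrees is straight ahead, 90 degrees is fully to one side.
    """
    return 1000.0 * spacing_m * math.sin(math.radians(source_angle_deg)) / SPEED_OF_SOUND_M_S


def path_delay_ms(extra_distance_m):
    """Extra arrival time caused by an extra path length in metres."""
    return 1000.0 * extra_distance_m / SPEED_OF_SOUND_M_S


print(f"ORTF, source fully to one side: {capsule_delay_ms(90):.2f} ms")
print(f"Ambient pair 3.43 m farther away: {path_delay_ms(3.43):.1f} ms")
```

The inter-capsule difference stays under half a millisecond, comparable to the time differences between human ears, whereas a few metres of misplacement between the ambient pair and the announce mics already costs several milliseconds.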

Depending on the event, I use a hard knee compressor or limiter with a ratio of 4:1, a slow attack time and a fast release. The threshold is usually 2 or 3 dB below 0.

It is important to keep a consistent sound field relative to both level and phase (position). If this perspective is changed repeatedly the sonic image presented to the home viewer will be confused.

The ambient mics also serve to mask any sudden changes made to the mix.

Action Group

All sports have specific areas of concentrated action, where points are scored, where plays transition from offense to defense and back, where coaches shout instructions and where players communicate amongst themselves. It is relatively easy to aim microphones at these areas of interest. The audio mixer must choose the correct microphone for each specific area of play, appropriate to the event and the setting.

I normally compress this group at a ratio of 4:1, with a slight soft knee curve, a slow attack, a fast release and a threshold 3 dB below 0.

I tend to classify the specific desirable sounds heard in most sports into 2 categories:

1) Thumps – low midrange (somewhere between 400Hz and 750Hz) ground contact, ball sounds, and physical contact.

and

2) Presence – midrange (somewhere between 1.25 kHz and 4.5 kHz) definition, voices, squeaks, pops and clicks.

By choosing the correct microphone, one can minimize the amount of equalization needed. In practice, however, the perfect microphone is often not available and EQ needs to be added to the channel signal. Needing too much emphasis or de-emphasis of a particular frequency can indicate a problem elsewhere: the monitoring in the audio mix room, a recurrent frequency in the arena, PA system equalization or other issues.

Following the action:

Most changes in the mix balance need to be fairly abrupt as the play moves around the field. To minimize the effect of rapidly opening and closing microphones, keeping a consistent tonal and level balance from channel to channel is essential. Select the primary or most important single source; optimize the sound and then balance the other sources to match. When mixing, I transition by leaving a mic open until the next source is also open, then the previous source can be faded out. This must be done almost instantaneously. When combined with the sound field established by the main ambient pair, the transitions are no longer apparent. The home viewers are unaware that anything is being altered in the mix balance; they simply hear the sounds that match the pictures.
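The overlap transition described above can be sketched as a pair of gain envelopes: the incoming mic opens first, and only then does the outgoing mic fade. The 50 ms timings and the linear ramps are assumptions for the example; in practice the move is made by hand on the faders.

```python
def overlap_gains(t, open_time=0.05, fade_time=0.05):
    """Return (outgoing_gain, incoming_gain) at t seconds into a transition.

    The incoming mic ramps up during open_time while the outgoing mic
    stays fully open; the outgoing mic then ramps down during fade_time.
    """
    incoming = min(max(t / open_time, 0.0), 1.0)
    outgoing = 1.0 - min(max((t - open_time) / fade_time, 0.0), 1.0)
    return outgoing, incoming


# Sample the envelopes at the start, mid-open, mid-fade and end.
for t in (0.0, 0.025, 0.075, 0.2):
    out_gain, in_gain = overlap_gains(t)
    print(f"t={t:5.3f}s outgoing={out_gain:.2f} incoming={in_gain:.2f}")
```

At no point are both sources closed, so there is never a hole in the action sound for the ambient pair to cover.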

Certain mics can be compressed for extra emphasis: coach mics, for example, or any other sources that isolate vocal responses. Other primary source mics may also be suitably enhanced by compression. Compression may also be a necessity for microphones that will be isolated to the router and/or to tape machines. (See section The Router)

Caution and restraint should be exercised in equalization and processing, however. Otherwise, the mix could become strident and unpleasant and/or the announcers will be masked by the game sounds. Overemphasis of any particular frequency band can also lead to transmission issues such as overlimiting and distortion. Overprocessing will lead to listener fatigue, and make the presentation unpleasant to listen to.

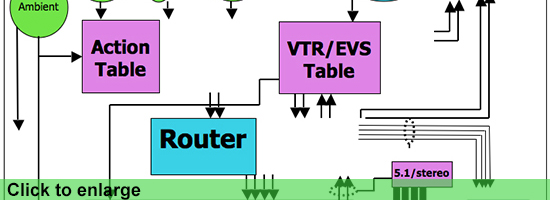

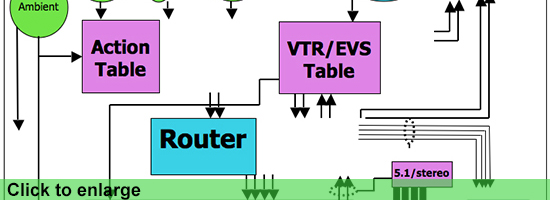

The Router:

The routing switcher is one of the most important tools in the mobile unit. Many different elements can be added to the show to enhance production choices and capabilities. By isolating reporter and interview positions, camera mics, and other possible replay sources it is possible to give the home viewer a unique perspective using replays with audio.

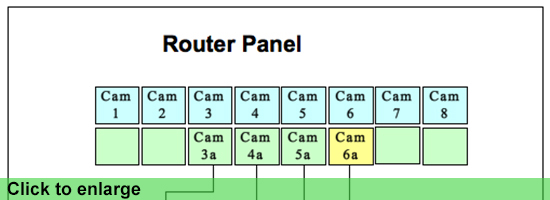

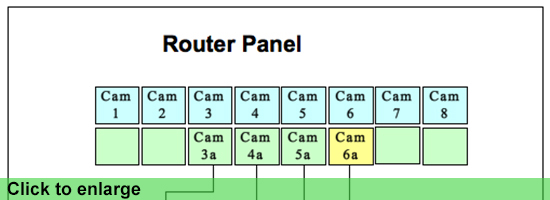

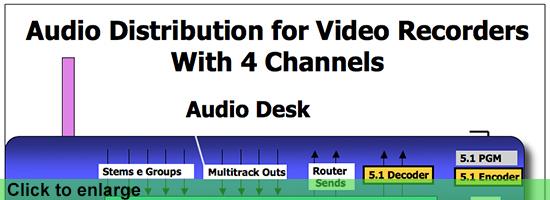

Depending on the routing switcher and control panel, a variety of signals are available to the video tape operator. These are usually organized by video source. In the case of a stereo show utilizing VTRs with 4 audio channels, for example, all the camera source buttons are programmed with an action/ambient stereo mix on channels 1-2 and a full program mix on channels 3-4. Another row of buttons presents video for the handheld cameras matched with an iso of the associated microphone on both channels 1 and 2. Additionally, there are several other mixes generated from the console’s aux busses for prefade reporter mics and various interview positions, and at least 1 extra stereo aux mix in case it is needed. Router audio signals can be generated from groups, auxes, console direct outputs, satellite return feeds, and perhaps a Betacam to access ENG or EFP footage gathered during the event. (see image below)

Router Panel

In the example shown above, assuming a 4 channel audio configuration, the top level of (blue) buttons are programmed so that channels 1-2 are a mix of the action and the ambience mics and channels 3-4 are a full mix of stereo program. The lower level of (green) buttons are programmed so that channels 1-2 receive the individual camera’s shotgun mic and channels 3-4 are a full mix of stereo program. The (yellow) button for camera 6 is an audio-only button programmed so that channels 1-2 are the pre-fade reporter’s mic.
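The button programming in this example can be written down as a small lookup table. This is only a way of restating the paragraph above: the dictionary layout and the function name are hypothetical, and the text does not say what the yellow button carries on channels 3-4.

```python
# Audio assignment per router button colour, as described in the text.
ROUTER_BUTTONS = {
    "blue": {"ch1-2": "action + ambience mix", "ch3-4": "full stereo program"},
    "green": {"ch1-2": "camera shotgun mic (iso)", "ch3-4": "full stereo program"},
    "yellow": {"ch1-2": "pre-fade reporter mic"},
}


def audio_for(button_color, channel_pair):
    """Look up what a VTR records on a channel pair for a given button."""
    return ROUTER_BUTTONS[button_color].get(channel_pair, "not specified in the text")


print(audio_for("green", "ch1-2"))
print(audio_for("yellow", "ch3-4"))
```

Whatever button the tape operator selects on the blue or green rows, channels 3-4 carry full program, so a replay can always fall back to the broadcast mix.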

For surround shows, router configurations are, of course, more complicated. In practice, audio elements are configured as stereo pairs and recombined in the mix desk prior to transmission. A surround synthesizer can be inserted across a stereo channel or mix bus to process stereo sources for surround transmission.

The routing switcher is fed audio signals from various outputs on the desk. Group sends, aux sends, direct outputs and multitrack outputs are fed to various inputs of the router for distribution and assignment to the video equipment, transmission, etc.

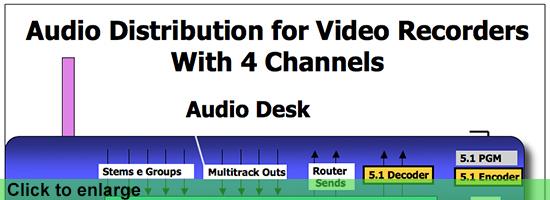

Many VTRs are limited to 4 channels of audio. Hard disk video recorders like the EVS are often configured for only 4 audio channels as well.

When working in surround reproduction and transmission, it is possible to use a surround encoder/decoder set to route a surround imaging matrix to a stereo pair. This signal is then routed through a decoder and played back as a surround source through the audio desk. This enables a machine with a four channel configuration to be used to reproduce surround material. Of course, only one playback machine would be available at any given time. (see image below)

VTR + EVS

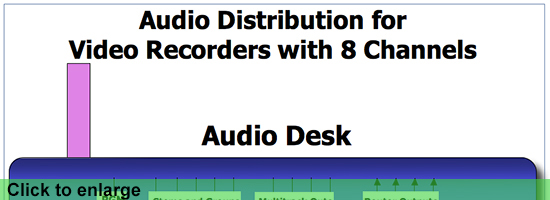

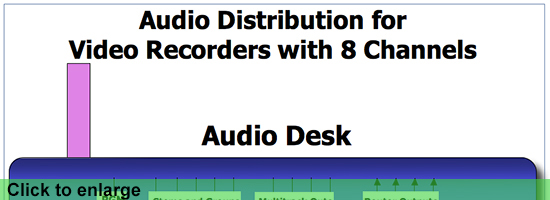

More sophisticated VTRs and EVS recorders allow 8 channels of discrete audio recording. It is therefore much simpler to maintain surround signal integrity in a live broadcast situation.

(see image below)

Microphone Positions for Specific Sports:

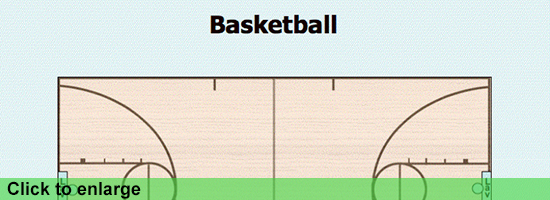

Basketball

Basketball is most often played and telecast from an indoor arena with a hardwood floor, concrete walls and (hopefully) thousands of animated fans. Indoor sports arenas are generally very reverberant and often any acoustic problems are exacerbated by using PA systems that are much louder than they need to be.

Lapel mics (Sony ECM 77s or similar) are mounted behind the baskets, on the backboards, in the rubber frame that surrounds the edge. A short shotgun mic (Sennheiser 416 or similar) is mounted on the stanchion, pointed at the free-throw line, and a long shotgun (Sennheiser 816) is mounted on the handle of the handheld camera behind the end zone. If there is a handheld camera at midcourt, it also has a long shotgun. An ORTF pair is mounted at midcourt.

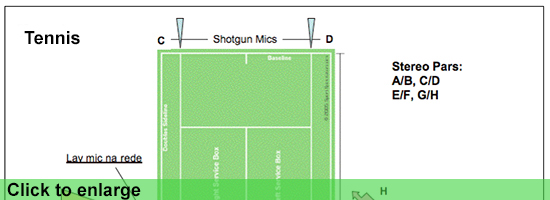

Tennis

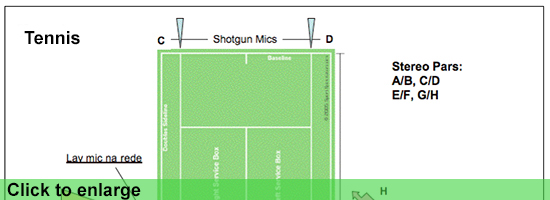

Tennis is unusual in that although the action is oriented side to side like most other sports, the perspective presented by television is from one end. Therefore the stereo perspective is perpendicular to the net rather than parallel.

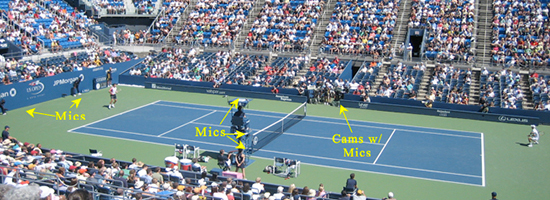

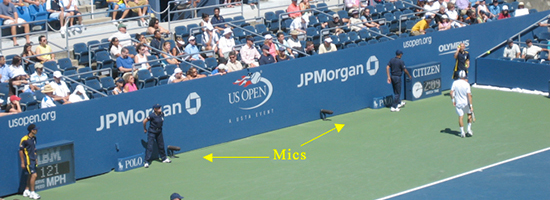

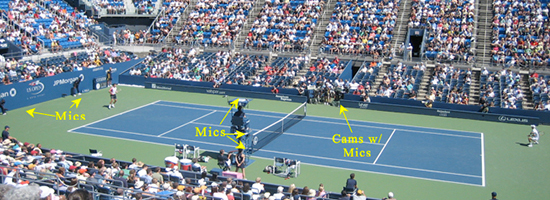

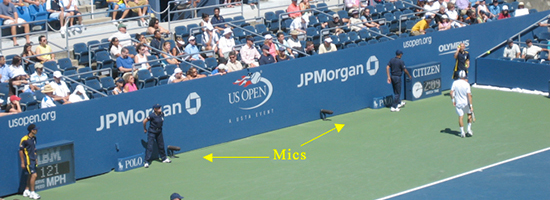

A stereo pair is set in the announce booth and another pair is mounted on the umpire’s chair to capture crowd and ambience sounds. A lapel mic is laced into the net. Four short shotguns are mounted on short mic stands behind each baseline. Shotguns are also mounted on courtside cameras and beneath the umpire’s chair.

US Open Tennis Championship, 2007 Louis Armstrong Stadium, USA

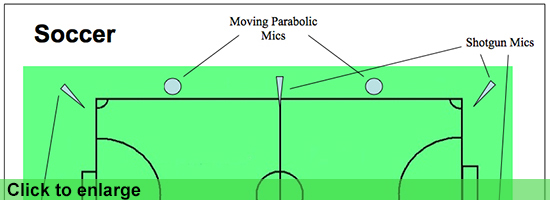

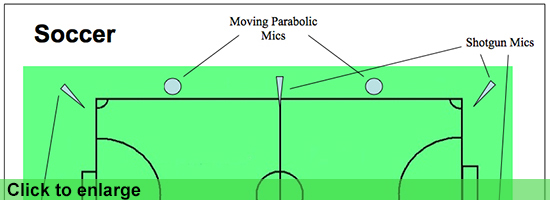

Soccer

To cover soccer (football), a combination of operated and stationary mics is used. I place an ORTF pair in the announce cabin and use long shotguns mounted on tall poles to capture the response of the cheering sections.

Opposite the team benches are two mobile operated mics. All cameras on the field have shotgun mics. The difficulty lies in trying to capture sounds from a great distance. Compression can help accentuate individual mics. It is extremely important for the mic operators to wear headphones to monitor their equipment, to be very active and to anticipate the direction of play. Exaggerating sounds that are close to mics, such as corner kicks, will make the other, more distant sounds seem inaudible by comparison. A careful balance must be maintained.

Golf

One of the most challenging sports to cover is golf. The arena for the sport is huge and there are only 2 areas where sounds are easy to capture: the tees and the greens. Every microphone (or pair of mics) must be available prefader with processing as a router source. For a typical golf course, this would be 18 stereo tee mics, 18 stereo green mics, 10 wireless handheld cameras with mics and 8 wireless operated shotgun mics, as well as ambience and crowd mics: about 62 stereo and surround microphone sources in all. Additionally, there are between 1 and 6 announce cabins, 2 to 4 wireless reporter units, a trophy presentation area, 18 VTRs, 4 EVS and edit suites as well.
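The source count quoted above can be tallied in a few lines. The per-category numbers and the total of about 62 are from the text; the remainder attributed to ambience and crowd sources is simply inferred from the difference.

```python
# Router sources itemized in the text for a typical golf course.
listed_sources = {
    "stereo tee mics": 18,
    "stereo green mics": 18,
    "wireless handheld camera mics": 10,
    "wireless operated shotgun mics": 8,
}

listed_total = sum(listed_sources.values())
quoted_total = 62  # "about 62" in the text

# Whatever is left over is taken up by ambience and crowd sources.
ambience_and_crowd = quoted_total - listed_total

print(f"Itemized sources: {listed_total}")
print(f"Ambience and crowd (inferred): about {ambience_and_crowd}")
```

Each of these sources needs its own prefader, processed path to the router, which is what drives the size of the console and router for a golf broadcast.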

The author checking the installation at the PGA Championship, Medinah, IL, USA, August 2006

This level of complexity is also common in track and field, gymnastics and Formula 1 racing. Each of these sports has specific peculiarities and challenges including:

Widely varying sound pressure levels,

Difficulties with microphone placement

Difficulties with signal transport and cable paths

Difficulties with weatherproofing

Multiple events occurring simultaneously

Many channels of wired and wireless mics and electric points

Many channels of wired and wireless intercommunications

Interconnections between analog, digital, copper and fiber optics

Facilities and crews that are shared between different broadcasters and production teams.

The process is never simple. Audio production for television requires significant planning in advance, flexibility on site, troubleshooting and rapid decision making while confronted with many variables. However, with careful organization each broadcast can be presented in its entirety with the accuracy and creativity that will create a sense of realism and excitement for the home viewer.

Daniel Littwin , director New York Digital.

contact: daniel.nydigital@gmail.com

São Paulo, Brazil


Using Bidirectional or Figure 8 Microphones

for television sport presents several changes

Maintaining a consistent sound level while presenting a dynamic fast paced event.

Keeping the audio coherent with the on-screen action.

Signal routing for transmission, replay, resale and audio.

Maintaining signal integrity, continuity and lip synch whether in mono stereo or surround.

Creating and monitoring multiple mixes simultaneously: Surround, Stereo, Mono, router feeds, alternate mixes for international clients, etc.

Depending on the complexity of the production, an engineer has between 5 hours and 5 weeks to set up the show.

For a typical NBA game one generally has 4 hours. This type of show will: be broadcast on 1 network, involve 1 field of play, have 1 announce position and possibly an “effects feed” for an associated second language or radio broadcast.

For the US Open Tennis tournament, the total equipment set up time is more than 3 weeks. This show involves over 125 networks, 5 fields of play, separate EFX

feeds from each tennis court in surround, stereo, mono as well as ambience only, over 100 different announce cabins, multiple interview positions, internet, internal stadium webcasts, and an all-encompassing intercom system, as well as many editing facilities, etc.

Live Mix techniques, in general:

Stems – audio subgroups of the broadcast mix.

Stems facilitate ease of operation for live acquisition. Establishing international sound sub-mixes, creating IFB

mixes and router feeds are all made easier through the use of stems. For editing and rebroadcast they are essential to recreate the sound of the show quickly and easily while allowing the editor to change segment lengths. Operationally, the subgroups are sent to DAs.

The signals are then returned to the desk as inputs, to allow access to auxiliary sends and to allow level manipulation without affecting the group master gain. This is very important for enabling isolated recordings for editing purposes, signal distribution to downstream clients and off line recording for replays with audio during the live broadcast.

1 EFX - sound effects or action. US television refers to action mics as EFX mics

2 IFB - interrupted fold back

3 DA – distribution amplifier

Grouping and processing

The Announce Group:

Here, I generally use 2 levels of dynamics.

At the group level, I insert a limiter, generally at a ratio of 10:1 a threshold of -1db, a medium slow attack time and a very fast release. This limiter acts as a safety, to prevent the overall submix from over-modulating. The insert point should be prefader.

Individual channels have “soft knee” compressors at ratios of 1.8:1 with medium attack and release times. The insert point should be prefader, post filter, pre-eq, if the console allows. These compressors have the effect of enhancing speech intelligibility and of keeping the announcers more “present” in the mix. Additionally, they provide an extra gain stage, if necessary.

Equalization normally entails a high pass filter set at 75Hz, a small, narrow “bump” in the low midrange somewhere between 450Hz and 800Hz to enhance the individual voice and a somewhat wider presence peak somewhere between 2200Hz to 3200Hz for clarity. A low pass filter is used in the event of high frequency noise problems that arise during broadcast or that cannot be solved in the allotted set up time.

Most of the processing is done to enhance intelligibility. Care must be taken not to allow harshness in the announcer voices, however.

The announce group (or groups) is then sent to a DA and returned to the desk as well as routed to various listening positions throughout the UM as well as to the video router or recorders as needed.

To fix the physical location of the announcers in the stadium, I place the main stereo ambient pair in the announce cabin (or just outside) so that the stadium noise arrives at the ambient mics at the same time as it arrives in the announce mics. This gives the home viewer the illusion that they are sitting in the announce cabin, watching the game with the announcers. (See section Ambience)

Ambience:

I normally build a separate ambience or crowd group. This allows me to limit or compress the crowd mics separately for routing purposes as well as allowing the compressors for the action mics group to be affected by just the action and not be affected by crowd response.

TV sports generally, are shown from 1 perspective point, with varying views added to enhance coverage. (Golf, track and field and gymnastics are notable exceptions to this generality) The perspective is generally the announcer’s viewpoint. To place the announcers in the stadium for the home viewer, one should use a stereo coincident or near-coincident pair. My preference is a matched pair of cardioid condenser mics in an ORTF configuration. (2 matched cardioid capsules set 17cm apart, at an angle of 110 degrees.) After experimenting with x/y and m/s pairs, I have found that the ORTF seems to best mimic human hearing. I place the mics so that they do not “hear” the announcers, but the arrival time of the ambient noise at the crowd mics is the same, (or almost the same), as at the announce mics. This is of course, limited to the announce cabin.

Depending on the event I use a hard knee compressor or limiter with a ratio of 4:1, a slow attack time and a fast release. The threshold is usually 2 or 3 dB before 0.

It is important to keep a consistent sound field relative to both level and phase (position). If this perspective is changed repeatedly the sonic image presented to the home viewer will be confused.

The ambient mics also serve to mask any sudden changes made to the mix.

Action Group

All sports have specific areas of concentrated action, where points are scored, where plays transition from offense to defence and back, where coaches shout instruction and where players communicate amongst themselves. It is relatively easy to aim microphones at these areas of interest. The audio mixer must choose the correct microphone for each specific area of play appropriate to the event and the setting.

I normally compress this group at a ratio of 4:1, a slight soft knee curve, slow attack, fast release times and a threshold of 3dB before 0.

I tend to classify the specific desirable sounds heard in most sports into 2 categories:

1) Thumps – low midrange (somewhere between 400Hz and 750Hz) ground contact, ball sounds, and physical contact.

and

2) Presence – midrange (somewhere between 1,25KHz and 4.5KHz) definition, voices, squeaks, pops and clicks.

By choosing the correct microphone, one can minimize the amount of equalization needed. In practice, however the perfect microphone is often not available and eq needs to be added to the channel signal. Too much emphasis or de-emphasis of a particular frequency can indicate problems: the monitoring in the audio mix room, a recurrent frequency in the arena, PA system equalization or other issues.

Following the action:

Most changes in the mix balance need to be fairly abrupt as the play moves around the field. To minimize the effect of rapidly opening and closing microphones, keeping a consistent tonal and level balance from channel to channel is essential. Select the primary or most important single source; optimize the sound and then balance the other sources to match. When mixing, I transition by leaving a mic open until the next source is also open, then the previous source can be faded out. This must be done almost instantaneously. When combined with the sound field established by the main ambient pair, the transitions are no longer apparent. The home viewers are unaware that anything is being altered in the mix balance; they simply hear the sounds that match the pictures.

Certain mics can be compressed for extra emphasis. Coach mics for example or any other sources that will isolate vocal responses. Other primary source mics may be suitably enhanced by compression. Compression may also be a necessity for microphones that will be isolated to the router and/or to tape machines. (see section The Routing Switcher)

Caution and restraint should be exercised in equalization and processing, however. Otherwise, the mix could become strident and unpleasant and/or the announcers will be masked by the game sounds. Overemphasis of any particular frequency band can also lead to transmission issues such as overlimiting and distortion. Overprocessing will lead to listener fatigue, and make the presentation unpleasant to listen to.

The Router:

The routing switcher is one of the most important tools in the mobile unit. Many different elements can be added to the show to enhance production choices and capabilities. By isolating reporter and interview positions, camera mics, and other possible replay sources it is possible to give the home viewer a unique perspective using replays with audio.

Depending on the routing switcher and control panel a variety of signals are available to the video tape operator. These are usually organized by video sources. In the case of a stereo show utilizing VTR’s with 4 audio channels for example: All the camera source buttons are programmed with an action/ambient stereo mix on channels 1 - 2 and a full program mix on channels 3 - 4. Another row of buttons presents video for the handheld cameras matched with an iso of the associated microphone on both channels 1 & 2. Additionally there are several other mixes generated from the console’s aux busses for prefade reporter mics, various interview positions and at least 1 extra stereo aux mix just in case it is needed. Router audio signals can be generated from groups, auxes, console direct outputs, satellite return feeds, and perhaps a betacam to access ENG or EFP footage gathered during the event. (see image below)

Router Panel

Router Panel

In the example shown above, assuming a 4 channel audio configuration, the top level of (blue) buttons are programmed so that channels 1-2 are a mix of the action and the ambience mics and channels 3-4 are a full mix of stereo program. The lower level of (green) buttons are programmed so that channels 1-2 receive the individual camera’s shotgun mic and channels 3-4 are a full mix of stereo program. The (yellow) button for camera 6 is an audio-only button programmed so that channels 1-2 are the pre-fade reporter’s mic.

For surround shows router configurations are of course, more complicated. In practice, audio elements are configured as stereo pairs and recombined in the mix desk prior to transmission. A surround synthesizer can be inserted across a stereo channel or mix bus to process stereo sources for surround transmission.

The routing switcher is fed audio signals from various outputs on the desk. Group sends, aux sends, direct outputs and multitrack outputs are fed to various inputs of the router for distribution e attribution for the video equipment, transmission, etc.

Many VTR’s are limited to 4 channels of audio. Hard disc video recorders like the EVS are often configured for only 4 audio channels as well.

When working in surround reproduction and transmission it is possible to use a surround decoder/encoder set to route a surround imaging matrix to a stereo pair. This signal is then routed through a decoder and played back as a surround source through the audio desk. This would enable a machine with a four channel configuration to be used to reproduce surround material. Of course only one playback machine would be available at any given time. (see image below)

VTR + EVS

More sophisticated VTR’s and EVS recorders allow 8 channels of discrete audio recording.

It is therefore much simpler to maintain surround signal integrity in a live broadcast situation.

(see image below)

Microphone Positions for Specific Sports:

Basketball

Basketball is most often played and telecast from an indoor arena with a hardwood floor, concrete walls and (hopefully) thousands of animated fans. Indoor sports arenas are generally very reverberant and often any acoustic problems are exacerbated by using PA systems that are much louder than they need to be.

Lapel mics (Sony ECM 77’s or similar) are mounted behind the baskets, on the backboards, in the rubber frame that surrounds the edge. A short shotgun mic (Sennheiser 416 or similar) is mounted on the stanchion pointed at the free-throw line and a long shotgun (Sennheiser 816) is mounted on the handle of the handheld camera behind the end zone. If there is a handheld camera at midcourt it also would have a long shotgun. An ORTF pair is mounted at midcourt.

Tennis

Tennis is unusual in that although the action is oriented side to side like most other sports, the perspective presented by television is from one end. Therefore the stereo perspective is perpendicular to the net rather than parallel.

A stereo pair is set in the announce booth and another pair is mounted on the umpire’s chair to capture crowd and ambience sounds. A lapel mic is laced into the net. 4 short shotguns are mounted on short mic stands behind each baseline. Shotguns are also mounted on courtside cameras and beneath the umpire’s chair.

US Open Tennis Championship, 2007 Louis Armstrong Stadium, USA

Soccer

To cover soccer (football) a combination of operated and stationary mics is used. I place an ORTF pair in the announce cabin and use long shotguns mounted on tall poles for the response of the cheering sections.

Opposite the team benches are two mobile operated mics. All cameras on the field have shotgun mics. The difficulty lies in trying to capture sounds from a great distance. Compression can help accentuate individual mics. It is extremely important for the mic operators to wear headphones to monitor their equipment, to be very active and to anticipate the direction of play. Over exaggeration of sounds that are close to mics, such as corner kicks, will make the other, more distant sounds seem inaudible by comparison. A careful balance must be maintained.

Golf

One of the most challenging sports to cover is golf. The arena for the sport is huge and there are only 2 areas where sounds are easy to capture: the tees and greens. Every microphone (or pair of mics) must be available prefader with processing as a router source. For a typical golf course, this would be 18 stereo tee mics, 18 stereo green mics, 10 wireless handheld cameras with mics, 8 wireless operated shotgun mics as well as ambience and crowd mics, about 62 stereo and surround microphone sources in all. Additionally there are between 1 and 6 announce cabins, 2 to 4 wireless reporter units, a trophy presentation area, 18 VTR’s, 4 EVS and edit suites as well.

Verification of the installations with the author at the PGA Championship, Medinah, IL, USA, August 2006

This level of complexity is also common in track and field, gymnastics and Formula 1 racing. Each of these sports has specific peculiarities and challenges including:

Widely varying sound pressure levels

Difficulties with microphone placement

Difficulties with signal transport and cable paths

Difficulties with weatherproofing

Multiple events occurring simultaneously

Many channels of wired and wireless mics and electric points

Many channels of wired and wireless intercommunications

Interconnections between analog, digital, copper and fiber optics

Facilities and crews that are shared between different broadcasters and production teams.

The process is never simple. Audio production for television requires significant planning in advance, flexibility on site, troubleshooting and rapid decision making while confronted with many variables. However, with careful organization each broadcast can be presented in its entirety with the accuracy and creativity that will create a sense of realism and excitement for the home viewer.

Daniel Littwin, director New York Digital.

contact: daniel.nydigital@gmail.com

São Paulo, Brazil

Mentioned:

ORTF configuration

EVS

Sony ECM 77

Sennheiser MKH 416

Sennheiser MKH 816

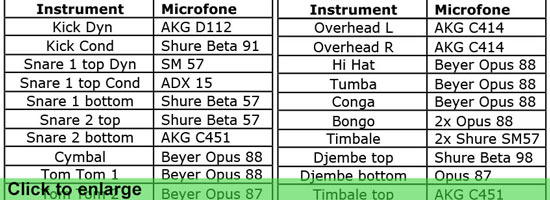

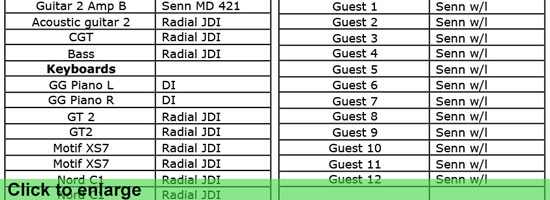

Mounting a Gala Show in Radio City

The Artist:

Gigi D’Alessio is very popular in Italy. He is also known in Brazil for having recorded “Um Coração Apaixonado” with Wanessa Camargo. The show at Radio City included guests Liza Minnelli, Paul Anka, Sylvester Stallone, Anastacia, Manhattan Transfer, Mario Biondi, Christian De Sica, Enrico Brignano, Benvenuti & Griffith, Valeria Marini and Ivete Sangalo. His band (2 guitars, bass, drums, percussion, piano, keyboards and a horn section) was supplemented by a small string orchestra of about 40 New York musicians. A dance troupe of 12 was also part of the performance.

Technical Details:

The show was recorded in HD, 1080i and surround by All Mobile Video’s Crossroads OB unit for editing and transmission the following week in Italy. Richard Wirth directed the cameras. He and Lenny Laxer of AMV managed the technical aspects of the production. Twelve Sony HD1000 or HD1500 cameras were used, including two jibs, two fixed cameras, a remote controlled track-mounted robotic camera on a crane, a hand-held camera and a steadicam. The backdrop was an enormous (25m x 31m) HD LED screen that was provided by Radio City. This screen was fed with live images from the cameras as well as prerecorded scenes and images, all sequenced and timed to the show and controlled by a computer. The “line cut” and all of the cameras and images were individually recorded. More than 172 audio channels were recorded, including the microphones used to capture the ambience and microphones mounted on the moving cameras. All of these microphones were mixed live as well as recorded individually in Protools for remixing in postproduction. Microphone and effects groups were created, organized by instrument type, and then sent to the Studer Vista 8 mixing desk in the OB unit. Certain key performance mics, like the lead vocal and certain soloists, were sent individually. All audio routing in the OB unit originated in the Studer Vista 8.

Because of time and budget constraints we never had a full dress rehearsal. Richard, our director, used a series of coverage zones for the cameras, similar to a multi-camera film shoot, so that each camera had a specific area to concentrate on. One camera covered the star, Gigi, at all times. Other cameras covered the dance routines and guest artists. This ensured that all aspects of the performance could be videotaped for use in post-production, if necessary. Stage entrances were pre-planned so that each guest could be shown entering and leaving the stage. Occasionally, these were altered at the last minute, which caused some consternation in the OB unit. However, clear communication and fast thinking always saved the shots.

Intercommunications:

The intercommunications system was an RTS/Telex Adams digital matrix system with 144 inputs and outputs. In the theater, 12 channels of wireless intercommunication were used in addition to 26 wired stations and all the camera intercoms. All key personnel in the OB unit had 32 channel digital key panels.

Setting Up

The show was scheduled to start at 19:30 on Monday, 14 Feb. Set up started two days earlier at 08:00. We were allotted 10 hours on Saturday to install all of the equipment. On Sunday we had another 10 hours for rehearsals of specific camera angles for dance routines and specific timing issues as well as some fine-tuning of the equipment.

The main lighting array was installed first. The PA system was installed next. It was an i-Series line array, provided by Clair. The house audio desk was a Protools Venue. The monitor audio desks were Digico SD8’s. Protools HD was used to record the show. Word clock was derived from the video master clock on the mobile unit, which also provided time code. As the show was for broadcast in Italy, all of the video and time code references were PAL, based on 25 frames/second. The audio tracks were recorded at 48 kHz/24-bit.
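Because word clock and time code came from the same master clock, every 25 fps frame corresponds to a whole number of 48 kHz samples (48,000 ÷ 25 = 1,920). The following is a minimal sketch of that relationship, assuming non-drop time code counted from midnight; the function names are my own and do not come from any recording system.

```python
SAMPLE_RATE = 48_000   # Hz, as recorded
FRAME_RATE = 25        # frames per second (PAL)
SAMPLES_PER_FRAME = SAMPLE_RATE // FRAME_RATE  # 1920 samples in each frame


def timecode_to_samples(tc):
    """Convert 'HH:MM:SS:FF' (25 fps, non-drop) to a sample count since midnight."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not 0 <= ff < FRAME_RATE:
        raise ValueError("frame number out of range for 25 fps")
    seconds = hh * 3600 + mm * 60 + ss
    return seconds * SAMPLE_RATE + ff * SAMPLES_PER_FRAME


def samples_to_timecode(samples):
    """Convert a sample count since midnight back to 'HH:MM:SS:FF' (rounds down to a frame)."""
    frames_total = samples // SAMPLES_PER_FRAME
    ff = frames_total % FRAME_RATE
    seconds = frames_total // FRAME_RATE
    hh, rem = divmod(seconds, 3600)
    mm, ss = divmod(rem, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


print(timecode_to_samples("19:30:00:00"))
print(samples_to_timecode(timecode_to_samples("19:30:00:12")))
```

With this kind of mapping, any point in a multitrack audio file can be located against the time-of-day code on the video recordings.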

The client provided us with a wish list, which we translated (sometimes literally from Italian into English) into our technical requirements. Intercommunications systems are always essential: often overlooked, until there is a problem, but if nobody can communicate, the show will NOT “go on”. In this case, someone would usually say or write, for example, that “Roberto” needed to speak with “Julio”, “Annette” and “Richard”. We would have to determine who these people were, what they did and what language they spoke. This in and of itself was a challenge as there was no complete crew list of both technical and production people and nobody wore nametags. We would then create the necessary channel in the matrix software and label it accordingly. Modern intercommunications systems are both software and hardware based, so not only does the computer need to be programmed correctly, but also the hardware needs to be adjusted and balanced for optimum performance. In a live event, there is only one chance to capture the moment, so correct equipment preparation is of the utmost importance.

The sound reinforcement crew and the artist determined all the microphone selections and positions. All wireless frequency allocation was determined on site by using “Shure Wireless Workbench™” software. The microphones, IFB’s, and wireless intercoms were coordinated to provide the most reliable performance without radio frequency interference. As there were 38 wireless mics, 24 wireless monitors and 12 channels of wireless intercom, as well as literally hundreds of walkie-talkies, this coordination was critical to the success of the show.

The intercommunications system had to accommodate both English and Italian speakers, sometimes sharing the same channels. Stage managers needed to speak with the artists, the Italian production staff and the American technical staff.

The television and camera crew all spoke English, as did the director. However, the producer, lighting designer, choreographer and musical director were all Italian. A great deal of patience was needed (as well as hand gestures). One interesting challenge was to keep the critical technical conversations in English separate from the creative/production conversations in Italian. All departments were assigned their own channels for communication: lighting, PA, TV audio, house coordination, video, camera, engineering and two channels for production.

A note regarding labels and identification: as the technical staff was mostly American and the production staff was mostly Italian, all of the equipment needed to be labeled in both languages. This was especially critical for communications. Only a very few people were fluent in both English and Italian.

Video Tape

All cameras and images were recorded individually to videotape. All machines were fed with timecode referenced to time of day. Every machine received a stereo mix of the program. All of the machines recording moveable cameras were fed with the particular camera’s associated microphone, copied to two channels. Certain image sequences were also recorded to EVS video hard disk systems in order to create playlists that were later recorded to VTR’s. Additionally, several HD camcorders were used to capture ambience from backstage, the audience and on the street.

Videotape ran continuously. All recordings were started at different times, so that overlaps could be recorded in EVS hard disk recorders and then transferred to videotape with the original timecode. All tapes were 1 hour in length. With a total of 16 recorders it would be impossible to change all tapes at once and keep a continuous recording of every source. So this system of overlaps was implemented. Using longer videotapes is not generally advisable as longer tapes are thinner and more subject to damage.
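The staggered starts can be illustrated with a short sketch. The text gives only the tape length and the number of recorders; the assumption here, which is mine, is that the start offsets are spread evenly across one tape length, with time zero being the moment the first machine rolls.

```python
TAPE_LENGTH_S = 60 * 60       # one-hour tapes, per the text
RECORDERS = 16                # per the text
SHOW_LENGTH_S = 3 * 60 * 60   # roughly three hours

# Assumption: start offsets are spread evenly across one tape length.
STAGGER_S = TAPE_LENGTH_S // RECORDERS  # 225 seconds between machine starts


def change_times(recorder_index, until_s=SHOW_LENGTH_S):
    """Seconds after the first roll at which this recorder's tape runs out."""
    first = recorder_index * STAGGER_S + TAPE_LENGTH_S
    return list(range(first, until_s + 1, TAPE_LENGTH_S))


schedule = {r: change_times(r) for r in range(RECORDERS)}
all_changes = sorted(t for times in schedule.values() for t in times)

for recorder, times in schedule.items():
    print(recorder, [f"{t // 60}:{t % 60:02d}" for t in times])
```

Under this assumption only one machine is ever out of record at a time, and the EVS only has to bridge one source during each change.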

Multitrack Audio

Every input source on stage was captured separately and recorded to a Protools HD system. Most of the signals were derived from the FOH audio desk. Ambience and audience microphones were recorded directly to the Protools HD system, using separate microphone preamps.

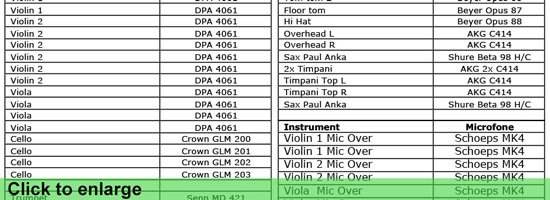

Here is a list of all of the microphones used in recording:

The antennas, receivers and transmitters for the wireless microphones and in-ears were located next to the monitor audio desk, backstage left. Most of the interface between the PA system and the OB unit was located here as well. To ensure better RF separation we placed the wireless intercom on the opposite side of the backstage area. This allowed for greater flexibility in focusing the antennas for better coverage and better access to the equipment for programming and maintenance.

Every frequency of every channel had to be changed to fit into the available spectrum.

Very often, on larger shows frequency coordination is handled in advance: every major venue in the USA has been “mapped” to show what spectra are available. It is therefore merely a matter of cross checking the available frequencies against what your equipment is capable of. All that is required is a list of the available gear.

Intermodulation and illegal frequency and power use is fairly common, so an RF spectrum analyzer is used to confirm the available space. The equipment is then fine adjusted on site. In the case of this show, all frequency coordination and adjustment was done on site, which took a considerable amount of time.
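To give an idea of what a coordination check involves, here is a greatly simplified sketch that looks only at two-transmitter third-order products (2·f1 − f2). The guard band of 0.1 MHz and the example frequencies are assumptions chosen for illustration; coordination software such as Wireless Workbench considers far more than this.

```python
from itertools import permutations


def third_order_products(freqs_mhz):
    """Two-transmitter third-order products, 2*f1 - f2, for every ordered pair."""
    return sorted({round(2 * f1 - f2, 3) for f1, f2 in permutations(freqs_mhz, 2)})


def conflicts(freqs_mhz, guard_mhz=0.1):
    """Return (carrier, product) pairs that fall within guard_mhz of each other."""
    hits = []
    for product in third_order_products(freqs_mhz):
        for carrier in freqs_mhz:
            if abs(product - carrier) < guard_mhz:
                hits.append((carrier, product))
    return hits


# Evenly spaced carriers land their products right on top of each other.
print(conflicts([500.0, 500.5, 501.0]))
# Uneven spacing moves the products away from the carriers.
print(conflicts([500.0, 500.5, 501.7]))
```

This is why evenly spaced channels are avoided: the intermodulation products of two transmitters fall exactly on a third.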

Radio City Music Hall is a “union” house. The entire technical crew are members of IATSE, the International Alliance of Theatrical Stage Employees. The rules are fairly rigid, but the crew at Radio City are extremely competent, helpful and generally friendly. It can be intimidating to work at such a famous facility, but with patience and respect to the regulations of the theatre, Radio City is one of the best places in the world to work. The attention to detail and personal safety is both impressive and reassuring. For example, when the lighting grid is about to be lowered, a horn sounds and ALL work stops. This reduces confusion, allows the appropriate personnel to work quickly and efficiently and prevents injuries due to falling objects or heavy cables.

Radio City is renowned for its “stagecraft”. Every part of the stage is on a hydraulic lift, so that entire sets can appear from and disappear into the floor, as if by magic.

Large objects, even trucks and elephants, can be rolled, lifted into the ceiling, or flown across the stage. Of course our show did not include any flying elephants, but they would have been happily accommodated, had they been needed for the production.

However, the capabilities of the house meant that we were able to build a very large show in about 16 hours, with an orchestra, band, dancers, special guests, complicated lighting and scenery cues, a large PA system, all of which had to be integrated with the High Definition OB unit with the Grass Valley Kalypso, Studer Vista 8, intercom system, and HD television cameras. Disassembling the show was also made easier by having such capabilities.

All of the necessary configurations were saved in all of the various mixing desks and recalled as each piece of music required. Lighting cues and video screen presentations were also preset. Camera shots were mostly handled manually, with a few presets on particular cameras for finding focus when the lights were out. Video settings for each camera were preset and then adjusted manually as required by particular shots. Cameras had to be maintained in correct adjustment constantly, as each camera was being recorded individually at all times.

The Show

The show was presented as one continuous performance without an intermission. Italian comedian Enrico Brignano provided some routines between musical segments. With all the performers and comedic intervals the entire show lasted about three hours, including the encores. The show was recorded with extensive notes and then edited in Italy for presentation the following week. The presentation was also to be made available for DVD release in Europe.

Daniel Littwin, director New York Digital.

contact: daniel.nydigital@gmail.com

São Paulo, Brazil

Maintaining Intelligibility in Principal Mix Sources

This is my first blog entry. So before I get to my subject, I’d like to introduce myself. I am Danny Littwin, an American television tech manager, audio engineer and musician residing in São Paulo, Brazil. Before moving here in 2007, I was a US television freelancer, work that I still do occasionally. Most of my career has been as an audio engineer. I have mixed just about every kind of event imaginable, from live televised sports events to large orchestral presentations, from ice hockey to pop music and from football to traditional Irish music. For my efforts and through a combination of good timing and good luck I have received several awards: 6 Emmys, 3 Grammys and a bunch of nominations.

I think it is not so much the awards that count, but rather the fact that I have been able to help my clients and colleagues attain their artistic and commercial visions through my experience and intuition. TV is a great business to be in, as most of the people you work with are there because they enjoy it. You could certainly make more money as a commodities trader, but then what fun is that? Some of my favorite memories are getting calls from my grandmother, telling me she saw my name in the credits.

Before I was a tech manager and director of a broadcast services company, I was an audio engineer. So today’s entry will deal with an interesting audio topic:

Maintaining Intelligibility in Principal Mix Sources

The Broadcast Brazil Column will be written by Daniel Littwin

How can one maintain clarity in the primary point(s) of focus of a mix? Whether that focus is a news anchor, sports announcer, lead vocalist or instrumental ensemble, it is necessary to hear the main performer clearly and in proper perspective.

There are many tools available to audio engineers to ensure that this perspective is achieved:

- Microphone position

- Choice of microphone

- Isolation mounts for microphones

- Gain and balance throughout the signal path

- Selection of specific equipment:

  - Solid state or tube mic preamps, transformer, transformerless, etc.

- Use of signal processing:

  - Equalization, dynamics, effects devices, etc.

- Pan position and mix balance.

There are many complicating factors and potential problems:

- How many sources are in the mix?

- How many of the sources are microphones?

- Do all the microphones share the same space/time perspective?

- Is this a live event or a multitrack/multilevel recording?

- Is there a substantial amount of background noise?

- How reverberant is the environment?

- Are there competing points of interest?

- Will this be reassembled in post-production or broadcast live or both?

- Are there multiple mixing desks in operation? i.e.: transmission, recording and PA

- What is the final destination of the product?

  - Radio or TV Broadcast?

    - Mono, stereo or surround?

    - What are the parameters of the metadata at the transmitter?

    - What are the parameters of the limiters at the transmitter?

  - CD, DVD or Video Game?

    - Is metadata being recorded?

    - What parameters are specified?

  - Internet streaming or MP3?

    - What bit rate?

- What is the record media?

  - Hard drive, solid state or tape-based digital media?

  - Analog tape

    - With or without noise reduction?

    - What tape speed is being used?

These are some of the factors that must enter into an engineer’s calculations.

Distortion

It is extremely important to keep the signal path free of distortion, whether from mechanical noise, or from electronic overloads. Accurate monitoring, metering and close attention is required to prevent distortions from entering the signal path. A single distorted signal can affect other clean signals detrimentally. Distortion becomes additive as well, in that the net effect of two or more distorted signals is worse than one signal on its own. Small bits of distortion at the beginning of a microphone’s signal path have an increasingly destructive effect on the final mix output.

This could be likened to listening to a stereo system where one speaker is slightly distorted: the sound is unpleasant, yet the overall effect is somewhat diminished when the other speaker is functioning normally. If both speakers are distorted, the system is extremely unpleasant if not impossible to listen to. However, if even only one speaker is distorted it still detracts from the response of the other speaker and from the sound of the system as a whole.

The dynamic range of a given signal may be too large to be processed by recording equipment and the corresponding media. Even though modern digital equipment is capable of dynamic ranges exceeding 110dB, unexpected variations of levels from synthesizers, crowd reactions or heavily amplified and processed instruments can cause even the best quality analog-to-digital converters to distort. Careful input adjustment, and judicious use of compression and/or limiting will prevent distortion.

Digital Versus Analog Distortion

Analog distortion can actually be used as a desirable effect. Analog distortion bears an inverse resemblance to the original audio signal, in that as more distortion is added, the signal waveform becomes less recognizable. Digital distortion is undesirable, as it bears no resemblance to the original signal. Furthermore, digital distortion is absolute above a given threshold and non-existent below that threshold. This also means that in response to a sudden transient, a signal can be distorted and unusable once the dynamic range of the system is surpassed.

Tape saturation, valve saturation and output clipping are all types of compression, which can be quite pleasant if used carefully. Transformers can have an effect similar to equalization, slightly emphasizing or de-emphasizing certain frequencies, depending on many factors including the core density, number of windings and wire gauge used in the transformer. There are also many digital plug-ins that emulate analog processors.

They can all be effective and complementary if used within the dynamic range of the system.
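The difference between the two kinds of overload can be seen in a few lines of code. This is only an illustration: the hard clip stands in for a digital system hitting full scale, and the tanh curve is a generic stand-in I have chosen for gradual analog saturation, not a model of any particular tape machine or valve stage.

```python
import math


def hard_clip(x, ceiling=1.0):
    """Digital-style clipping: untouched below the ceiling, flat-topped above it."""
    return max(-ceiling, min(ceiling, x))


def soft_clip(x):
    """A tanh curve used as a generic stand-in for gradual analog saturation."""
    return math.tanh(x)


# Compare the two curves as the input level rises past full scale (1.0).
for level in (0.25, 0.5, 1.0, 1.5, 2.0):
    print(f"in={level:4.2f}  hard={hard_clip(level):4.2f}  soft={soft_clip(level):4.2f}")
```

The hard clip leaves the signal untouched until the ceiling and then flattens it completely, while the soft curve begins to compress the signal gently well before that point.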

In any mix, every signal affects every other signal. Good mixes take advantage of this phenomenon to create a new complementary sound out of the elements presented. Distorted waveforms will combine with non-distorted signals and make the individual components of the mix appear to be corrupted. Distortion will affect not only frequency response but also signal phase. Therefore distortion will lead to irregularities or even a collapse of the stereo or surround image, which is based on phase information.

Data Compression, Noise Reduction and Signal Encoding/Decoding

If data compression is used it is imperative that the correct codecs be implemented. Most often this is handled automatically. In some cases it is necessary to choose. If noise reduction is used it must be consistent throughout the reproduction chain. When mixing in surround it is extremely important to manage the metadata correctly, both while encoding and decoding.

Accurate documentation is very important in maintaining signal quality. Regardless of how the information is preserved (on labels, as PDF files accompanying the data files or even as BWAV file headers), an accurate description of any relevant data will prevent mismanagement that leads to bad audio reproduction. Any signal must be decoded consistently with how it was encoded, regardless of whether that encoding is noise reduction, data compression or metadata. Good documentation facilitates proper signal management. Accurately reproducing the audio from a commercial CD is easy. Dealing with thousands of undocumented files in a myriad of formats is extremely difficult. It is good to assume that the work you do will be used by someone who has no idea of your project’s history other than what your notes indicate.

Microphone Technique

The first place to ensure intelligibility is at the very beginning of the signal chain: the microphone. Position is of primary importance. Engineers must strike a balance between two physical characteristics of microphones.

- The Inverse Square Law. The closer a microphone is to a sound source, the louder that source will be in relation to the background sound, by an inverse square factor. At a distance of 20 cm a source will deliver 4 times the sound intensity that it does at a distance of 40 cm (40 ÷ 20 = 2; 2² = 4). Sound that emanates from a point source drops in level by about 6 dB for every doubling of distance. So a signal of 90 dB at 20 cm will be 84 dB at 40 cm.

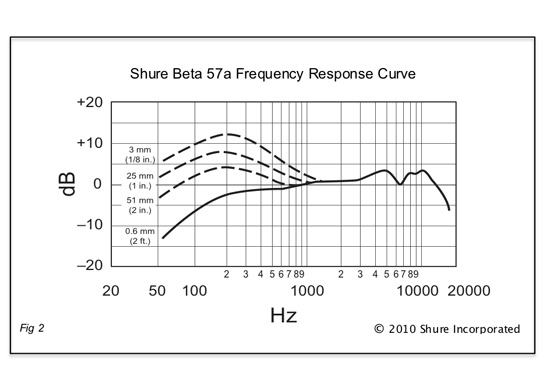

- All directional microphones are subject to the Proximity Effect, where the bass frequencies are increased in relation to the overall tonal balance. See Fig 2.

Therefore if a microphone is too far from a sound source, the source will be indistinguishable from the background noise. If a microphone is too close to a sound source it will suffer from hyperextended low frequency response, making the sound appear muffled. The challenge is to find the position where the microphone distinguishes the desired sound from the background yet does not over-enhance the low frequency components. For most dynamic cardioid mics like the Shure SM 57/58 or Beta 57/58 that distance is generally between 15 and 30 cm.
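The inverse square arithmetic is easy to check numerically. The sketch below assumes an ideal point source in a free field, with no reflections; real rooms will differ. The function name is my own.

```python
import math


def level_at_distance(level_db, ref_distance, new_distance):
    """Level of a point source at new_distance, given its level at ref_distance.

    Free-field assumption: the level falls by 20*log10 of the distance ratio,
    which is about 6 dB for each doubling of distance.
    """
    return level_db - 20 * math.log10(new_distance / ref_distance)


# The example from the text: 90 dB at 20 cm, then doubling the distance twice.
for distance_cm in (20, 40, 80):
    print(distance_cm, "cm:", round(level_at_distance(90, 20, distance_cm), 1), "dB")
```

The same function shows why a few centimeters matter so much at close range: moving from 15 cm to 30 cm costs as many decibels as moving from 3 m to 6 m.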

Frequency Response Curve

The proximity effect can be used to enhance the bass component of a voice. However at distances where the proximity effect becomes apparent a very small adjustment in distance will yield a very large difference in bass response. The proximity effect will also increase the apparent sensitivity to plosives. Certain consonants, especially “P” and “B”, will be over-accentuated.

Wind and vibration are other factors that will affect clarity:

If the microphone is placed in a windy environment or if the talent requires, use a windscreen.

To reduce unwanted sound due to vibration use isolation or shock mounts. Certain microphones are designed for handheld use. Others will always require isolation mounts.

Isolation mounts should be used for orchestras, ensembles, choirs, and sources that are captured from a distance. Large diaphragm microphones also should be used with shock mounts. It also should be noted that in general, the greater the directionality of a microphone the more sensitivity it has to handling and wind noise as well as to proximity effect.

Certain microphones are sensitive to diaphragm overloads. In such cases either move the microphone farther from the sound source or change the microphone. Even extremely durable microphones can develop rigidity in the diaphragm over time, which will make them prone to distortion, as they get older.

Microphone Position

Microphone position is of primary importance regardless of what kind of music or program is being presented. If a microphone is correctly positioned the sound of the instrument will be captured. If a microphone is incorrectly positioned even with a perfect signal chain the sound will be compromised.

Vocals

The human voice was the first musical instrument, so regardless of culture or category of music it is the instrument that bears the greatest scrutiny. As such, artists and producers are most apprehensive and preoccupied with the recording of vocals. Achieving a great vocal sound can be compromised by the performer’s insecurities and/or the producer’s concerns and preconceptions. Clarity in the vocal is objective; you can either hear it or you cannot. The tonal coloration however, is subject to taste, available equipment and how the vocal sound fits within the rest of the tracks (or vice versa) and the remainder of the entire production.

A very important issue for engineers while recording vocals is an overabundance of bass due to the proximity effect mentioned above. Many microphones are equipped with bass filters to ameliorate this problem. Of course when recording an operatic bass this sort of filter cannot be used and the audio operator must rely on positioning. A second common problem is distortion. Distortion is not necessarily obvious nor is it always visible on the meters. Careful listening on an accurate speaker or headphone system is required.

Instruments

Every instrument presents its own particular challenge. Every individual instrument family has general characteristics and individual instruments each have their own sonic personalities.

Some examples:

Woodwinds:

Most of the sound from a woodwind comes through the keys. The sonic character of the instrument, the harmonic overtones and also the fundamental note all radiate from the entire instrument through the keys. Only the fundamental tones and the lowest note emanate from the bell. Flutes are the exception with the majority of the sound coming from the mouthpiece.

Horns develop their sounds from the bell.

Strings form their sounds from the bridge of the instrument. In the case of the violin family, it is a good idea to aim the microphone at both the bridge and the sound post. For guitars, the sound originates from the bridge and radiates outward across the soundboard, or top of the instrument. The sound of the instrument does not emanate from the sound hole. The sound hole acts as a bass relief port similar to the bass port on a speaker.

One of the more interesting challenges is how to make a clean recording of a highly amplified instrument such as a distorted electric guitar. In general, one should be able to hear the same sound at the amplifier as through the monitoring system. If the quality of sound remains constant regardless of the monitoring level, all should be well.

Mix balance

Assuming that all the signals are well recorded and free of distortion, clarity can be assured through both level and pan position in a mix.

Equalization is mostly used as a corrective measure. However, EQ can also be used to emphasize or deemphasize components of a mix. In a simple voice over background music mix, a trick used in American radio production is to equalize the music to deemphasize the midrange frequencies so that the voice can be heard clearly while the level of the music is still apparent. A small dip (-1.5 to -4 dB) from approximately 500 Hz to 3,000 Hz in the music track will make an announcer’s voice much more present without sacrificing the level of the music. It is often easier and faster to apply a static process, such as equalization, rather than a dynamic process such as ducking, though ducking does work very well and can be quite transparent if set up properly.
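As an illustration of the midrange dip, here is a minimal sketch of a single peaking filter using the widely published Audio EQ Cookbook biquad formulas. The center frequency (the geometric mean of 500 Hz and 3,000 Hz), the Q of 0.5 and the -3 dB depth are my assumptions for the example; in practice these are set by ear.

```python
import cmath
import math


def peaking_eq_coefficients(f0, gain_db, q, fs=48_000):
    """Biquad peaking-EQ coefficients (Audio EQ Cookbook form)."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    b = (1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp)
    a = (1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp)
    return b, a


def response_db(b, a, freq, fs=48_000):
    """Magnitude response of the biquad at freq, in dB."""
    z1 = cmath.exp(-1j * 2 * math.pi * freq / fs)  # z to the power -1
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20 * math.log10(abs(num / den))


# Assumed settings: a broad -3 dB dip centered between 500 Hz and 3 kHz.
F0 = math.sqrt(500 * 3000)
b, a = peaking_eq_coefficients(F0, gain_db=-3.0, q=0.5)

for f in (100, 500, 1225, 3000, 10_000):
    print(f, "Hz:", round(response_db(b, a, f), 2), "dB")
```

A low Q keeps the dip broad and gentle, so the music loses presence in the voice range without sounding obviously equalized.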

Some artists or producers prefer that the main voice or instrument be much louder than any of the other sources. In such cases it is still necessary to pay attention to clarity. A muffled voice will always sound like a muffled voice, even if it is the only thing in the mix.

Dynamics

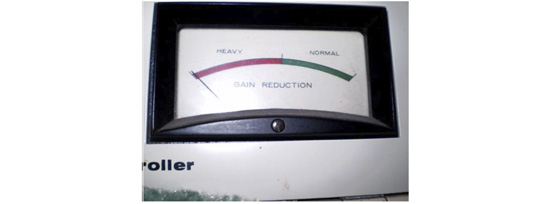

Compressors, gates and duckers can all be used to enhance clarity of a source. Limiters are considered to be mostly safety devices and are used to prevent the overall level from exceeding a given value, e.g. -3 dBFS, though they do alter the tonal quality of the signal and as such can be used to change the tonal coloration.

____________________

1 How do Woodwind Instruments Work? J. Wolfe, University of New South Wales, 1994

Compressors were originally developed to reduce the dynamic range of broadcast and recorded material so it would be coherent with technology available at the time. Transmitters have dynamic range limitations; if fed with a signal that is too loud, the transmitter will be damaged. If the signal is not loud enough then the station’s broadcast coverage area will be attenuated. Originally the audio signal was fed directly into the transmitter. The only level control was a skilled operator who worked with a script or a score to anticipate volume levels and keep the equipment operating at peak efficiency.

The compressor as we know it today has its origins in the CBS Laboratories in New York and Stamford, Connecticut. The Audimax, an automatic gain controller, was introduced in 1959 and the Volumax, a peak limiter, was introduced in the 1960s. Both units were solid-state electronic devices. Previous dynamics devices were tube based and were extremely expensive.

Fig 3

Fig 4

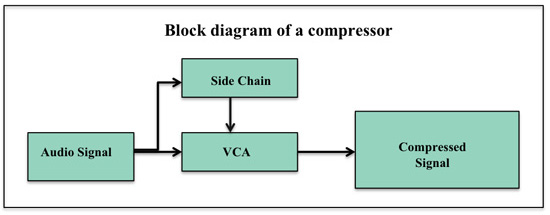

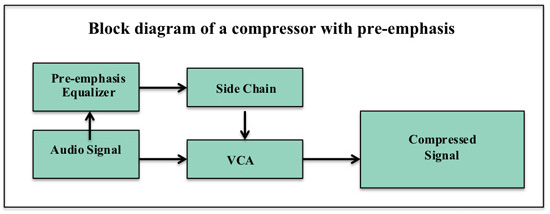

Dynamics processors function by using a control, or side chain, signal to determine the amount of processing applied. This control signal is derived from the input or possibly from a parallel signal.
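The role of the side chain can be sketched in a few lines of Python. This is a minimal illustration, not the design of any particular unit: the function name `compress`, the threshold, the ratio and the per-sample attack and release smoothing coefficients are all assumed values chosen for the example. When no separate `key` is supplied the control signal is derived from the input itself (ordinary compression); when a parallel signal such as a voice track is supplied as the key, the same code behaves as a ducker.

```python
import math

def compress(signal, key=None, threshold_db=-20.0, ratio=4.0,
             attack=0.9, release=0.999):
    """Feed-forward gain reduction driven by a side-chain (key) signal.

    signal: list of samples to be processed (full scale = 1.0)
    key:    list of samples that controls the gain; defaults to the input
    attack, release: assumed one-pole smoothing coefficients per sample
    """
    if key is None:
        key = signal                      # side chain derived from the input
    envelope = 0.0
    out = []
    for x, k in zip(signal, key):
        level = abs(k)
        # Follow rising levels quickly and falling levels slowly.
        coeff = attack if level > envelope else release
        envelope = coeff * envelope + (1.0 - coeff) * level
        level_db = 20.0 * math.log10(max(envelope, 1e-9))
        over_db = level_db - threshold_db
        # Above the threshold, the output rises only 1 dB per `ratio` dB of input.
        gain_db = -over_db * (1.0 - 1.0 / ratio) if over_db > 0.0 else 0.0
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out

# Ducking: constant-level "music" keyed by a "voice" that enters halfway through.
music = [0.5] * 2000
voice = [0.0] * 1000 + [0.8] * 1000
ducked = compress(music, key=voice)
print(ducked[500], ducked[-1])            # untouched before the voice, reduced after
```

Because the gain is computed from the key rather than from the processed signal, the music is left alone while the voice is silent and is pulled down only while the voice is present.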

____________________

2 Decibels Relative to Full Scale

3 A short History of Transmission Audio Processing in the United States © 1992 Robert Orban

Fig 5

Compressors have the effect of accentuating whatever frequencies are most prevalent in the signal, and music tends to have more energy in the low frequency regions. Over-compressing a mix will therefore accentuate the low frequencies while masking the high frequencies, making the mix sound dull. However, this characteristic allows a compressor to be used as a de-emphasis device.

Fig 6

By exaggerating the frequencies associated with sibilance at the input to the side chain, the compressor will act on those frequencies and diminish the sibilance. By exaggerating the frequencies associated with plosives at the input to the side chain, the compressor will act on those frequencies and diminish them. In this way a barrier to intelligibility can be overcome.

Over-processing can lead to listener fatigue, diminished comprehension and even distortion. An overabundance of any process, whether it is dynamics, equalization or effects, can adversely affect the mix. It is absolutely preferable to make corrections early in the process: with the performance, with microphone choice and position, with correct level management and so forth. Even so, there are many times when it is not possible to do so. In such cases equalization, dynamics and other processes can be applied as corrective measures. However, being aware of the optimum procedures can lessen the necessity for such corrective measures.
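The side-chain emphasis described above for sibilance can be sketched as a very crude de-esser in Python. Everything here is an assumption made for illustration: the function names, the 48 kHz sample rate, the threshold and ratio, and above all the use of a simple first difference as the high-frequency emphasis, which stands in for the tuned filter a real unit would use. The gain reduction is applied to the full signal, but it is triggered only by the emphasized side chain.

```python
import math

def sine(freq, n, fs=48000.0, amp=0.5):
    """Generate n samples of a sine wave, used here as a test tone."""
    return [amp * math.sin(2.0 * math.pi * freq * i / fs) for i in range(n)]

def de_ess(signal, threshold_db=-30.0, ratio=6.0, smooth=0.99):
    """Compress the signal using a high-frequency-emphasized side chain."""
    envelope = 0.0
    previous = 0.0
    out = []
    for x in signal:
        # First difference: boosts high frequencies, nearly cancels low ones.
        emphasized = x - previous
        previous = x
        # Smooth the rectified side chain into a control envelope.
        envelope = smooth * envelope + (1.0 - smooth) * abs(emphasized)
        level_db = 20.0 * math.log10(max(envelope, 1e-9))
        over_db = level_db - threshold_db
        gain_db = -over_db * (1.0 - 1.0 / ratio) if over_db > 0.0 else 0.0
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out

def peak(samples):
    return max(abs(s) for s in samples)

# Two tones at the same level: one in the sibilance region, one well below it.
hiss = de_ess(sine(8000.0, 4800))
body = de_ess(sine(200.0, 4800))
print(peak(hiss[-480:]), peak(body[-480:]))
```

The 8 kHz tone is pulled down substantially while the 200 Hz tone at the same level passes unchanged. Swapping the emphasis for one that favors low frequencies would, by the same logic, make the compressor respond to plosives instead.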

By carefully managing the available resources (talent or event, microphone selection and position, equipment and time), it is possible to maintain intelligibility in the components of any given mix.

Maintaining clarity requires a basic understanding of the physics of sound as applied to microphone technique, familiarity with the available equipment and, in general, attention to detail.

Daniel Littwin, director, New York Digital.

contact: daniel.nydigital@gmail.com

São Paulo, Brazil