Guest Columnist Rene Schaap

The first guest columnist for BroadcastBrazil

Introduction:

René Schaap has worked in the broadcast industry for almost 30 years, starting as an ENG sound engineer in the Netherlands. The Dutch company where he started assigned him to all kinds of jobs and projects (ENG, EFP, studio and satellite uplink), which made him an engineer who could solve problems on location and finalise audiovisual projects with a 100% result.

His jobs led him to work on big events like Formula 1 and for big broadcasters like the BBC and TV Globo Brazil. These projects also took him all over the planet, to all kinds of countries, and of course on a lot of adventures.

Nowadays he is managing director of Connecting Media, a company specialised in interactive webcasting, broadcast, web and mobile. Living in Santos, he runs the Brazilian office and, in part, the Dutch office.

René was a finalist in the documentary festival 'Curta Santos' and was twice nominated for a 'Streaming Media Award' with Connecting Media. Read more about him on his personal blog, www.reneschaap.nl.

We need more guest columnists at Broadcast Brazil. If you want to share your thoughts, join us.

René Schaap

More information can be found on his LinkedIn, br.linkedin.com/in/connectingmedia. Follow him on Twitter: René_Schaap

The Broadcast Brazil Column will be written by Daniel Littwin

How can one maintain clarity in the primary point(s) of focus of a mix? Whether that focus is a news anchor, sports announcer, lead vocalist or instrumental ensemble, it is necessary to hear the main performer clearly and in proper perspective.

There are many tools available to audio engineers to ensure that this perspective is achieved:

- Microphone position

- Choice of microphone

- Isolation mounts for microphones

- Gain and balance throughout the signal path

- Selection of specific equipment:

  - Solid state or tube mic preamps, transformer, transformerless, etc.

- Use of signal processing:

  - Equalization, dynamics, effects devices, etc.

- Pan position and mix balance.

There are many complicating factors and potential problems:

- How many sources are in the mix?

- How many of the sources are microphones?

- Do all the microphones share the same space/time perspective?

- Is this a live event or a multitrack/multilevel recording?

- Is there a substantial amount of background noise?

- How reverberant is the environment?

- Are there competing points of interest?

- Will this be reassembled in post-production or broadcast live or both?

- Are there multiple mixing desks in operation, e.g. transmission, recording and PA?

- What is the final destination of the product?

  - Radio or TV Broadcast?

    - Mono, stereo or surround?

    - What are the parameters of the metadata at the transmitter?

    - What are the parameters of the limiters at the transmitter?

  - CD, DVD or Video Game?

    - Is metadata being recorded?

    - What parameters are specified?

  - Internet streaming or MP3?

    - What bit rate?

- What is the record media?

  - Hard drive, solid state or tape-based digital media?

  - Analog tape?

    - With or without noise reduction?

    - What tape speed is being used?

These are some of the factors that must enter into an engineer’s calculations.

Distortion

It is extremely important to keep the signal path free of distortion, whether from mechanical noise or from electronic overloads. Accurate monitoring, metering and close attention are required to prevent distortions from entering the signal path. A single distorted signal can affect other clean signals detrimentally. Distortion is also additive, in that the net effect of two or more distorted signals is worse than one signal on its own. Small bits of distortion at the beginning of a microphone’s signal path have an increasingly destructive effect on the final mix output.

This could be likened to listening to a stereo system where one speaker is slightly distorted: the sound is unpleasant, yet the overall effect is somewhat diminished when the other speaker is functioning normally. If both speakers are distorted, the system is extremely unpleasant if not impossible to listen to. However, if even only one speaker is distorted it still detracts from the response of the other speaker and from the sound of the system as a whole.

The dynamic range of a given signal may be too large to be processed by recording equipment and the corresponding media. Even though modern digital equipment is capable of dynamic ranges exceeding 110 dB, unexpected variations of levels from synthesizers, crowd reactions or heavily amplified and processed instruments can cause even the best quality analog-to-digital converters to distort. Careful input adjustment and judicious use of compression and/or limiting will help prevent distortion.

Digital Versus Analog Distortion

Analog distortion can actually be used as a desirable effect. Analog distortion bears an inverse resemblance to the original audio signal, in that as more distortion is added, the signal waveform becomes less recognizable. Digital distortion is undesirable, as it bears no resemblance to the original signal. Furthermore, digital distortion is absolute above a given threshold and non-existent below that threshold. This also means that in response to a sudden transient, a signal can be distorted and unusable once the dynamic range of the system is surpassed.
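The contrast between the two behaviours can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `hard_clip` models a digital ceiling at full scale (1.0), and `soft_saturate` uses a tanh curve as an assumed stand-in for gradual analog-style saturation. Neither function name nor the tanh model comes from any particular device.

```python
import math

def hard_clip(x: float, ceiling: float = 1.0) -> float:
    """Digital-style clipping: untouched below the ceiling, flattened above it."""
    return max(-ceiling, min(ceiling, x))

def soft_saturate(x: float) -> float:
    """tanh curve: an assumed, simplified model of gradual analog saturation."""
    return math.tanh(x)

# Compare how each curve treats peaks below, at and above full scale.
for peak in (0.5, 1.0, 1.5, 3.0):
    print(peak, hard_clip(peak), round(soft_saturate(peak), 3))
```

Below the ceiling the hard clipper leaves the signal untouched, while anything above it is cut flat. The tanh curve bends progressively, so the amount of distortion grows with level instead of switching on at a threshold.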

Tape saturation, valve saturation and output clipping are all types of compression, which can be quite pleasant if used carefully. Transformers can have an effect similar to equalization, slightly emphasizing or de-emphasizing certain frequencies, depending on many factors including the core density, number of windings and wire gauge used in the transformer. There are also many digital plug-ins that emulate analog processors.

They can all be effective and complementary if used within the dynamic range of the system.

In any mix, every signal affects every other signal. Good mixes take advantage of this phenomenon to create a new complementary sound out of the elements presented. Distorted waveforms will combine with non-distorted signals and make the individual components of the mix appear to be corrupted. Distortion will affect not only frequency response but also signal phase. Therefore distortion will lead to irregularities or even a collapse of the stereo or surround image, which is based on phase information.

Data Compression, Noise Reduction and Signal Encoding/Decoding

If data compression is used it is imperative that the correct codecs be implemented. Most often this is handled automatically. In some cases it is necessary to choose. If noise reduction is used it must be consistent throughout the reproduction chain. When mixing in surround it is extremely important to manage the metadata correctly, both while encoding and decoding.

Accurate documentation is very important in maintaining signal quality. Regardless of how the information is preserved (on labels, as PDF files accompanying the data files or even as BWAV file headers), an accurate description of any relevant data will prevent mismanagement that leads to bad audio reproduction. Any signal must be decoded in a manner consistent with how it was encoded, regardless of whether that encoding is noise reduction, data compression or metadata. Good documentation facilitates proper signal management. Accurately reproducing the audio from a commercial CD is easy. Dealing with thousands of undocumented files in a myriad of formats is extremely difficult. It is good to assume that the work you do will be used by someone who has no idea of your project’s history other than what your notes indicate.

Microphone Technique

The first place to ensure intelligibility is at the very beginning of the signal chain: the microphone. Position is of primary importance. Engineers must strike a balance between two physical characteristics of microphones.

- The Inverse Square Law. The closer a microphone is to a sound source, the stronger that source will be in relation to the background sound, by an inverse square factor. At a distance of 20 cm the sound intensity from a source will be 4 times greater than at a distance of 40 cm (40 ÷ 20 = 2; 2² = 4). Sound that emanates from a point source drops in level by 6 dB for every doubling of distance. So a signal of 90 dB at 20 cm will be 84 dB at 40 cm.

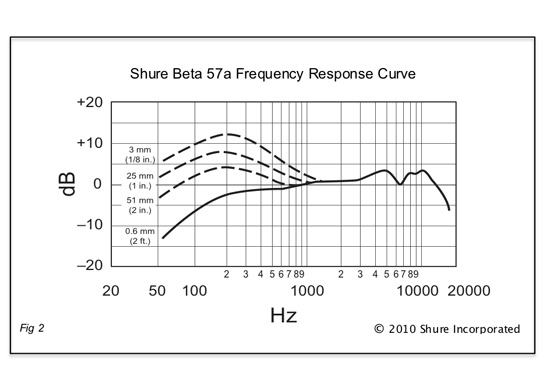

- All directional microphones are subject to the Proximity Effect, where the bass frequencies are increased in relation to the overall tonal balance. See Fig 2.

Therefore, if a microphone is too far from a sound source, the source will be indistinguishable from the background noise. If a microphone is too close to a sound source, it will suffer from an exaggerated low frequency response, making the sound appear muffled. The challenge is to find the position where the microphone distinguishes the desired sound from the background yet does not over-enhance the low frequency components. For most dynamic cardioid mics like the Shure SM 57/58 or Beta 57/58 that distance is generally between 15 and 30 cm.
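The inverse square arithmetic above can be checked with a short Python sketch. It assumes an ideal point source in a free field (no reflections), and the function names are hypothetical helpers chosen for this illustration.

```python
import math

def intensity_ratio(d_ref: float, d_new: float) -> float:
    """Intensity at d_new relative to intensity at d_ref (inverse square law)."""
    return (d_ref / d_new) ** 2

def level_change_db(d_ref: float, d_new: float) -> float:
    """Level change in dB when moving from d_ref to d_new (same units for both)."""
    return -20.0 * math.log10(d_new / d_ref)

def level_at(level_ref_db: float, d_ref: float, d_new: float) -> float:
    """Level at d_new, given the level measured at d_ref."""
    return level_ref_db + level_change_db(d_ref, d_new)

if __name__ == "__main__":
    print(intensity_ratio(40, 20))           # moving from 40 cm in to 20 cm
    print(round(level_change_db(20, 40), 2))  # doubling the distance
    print(round(level_at(90, 20, 40), 2))     # 90 dB at 20 cm, measured at 40 cm
```

Doubling the distance gives a change of roughly -6 dB, which is where the 90 dB to 84 dB figure comes from. Real rooms add reflections, so measured values will differ.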

Frequency Response Curve

The proximity effect can be used to enhance the bass component of a voice. However at distances where the proximity effect becomes apparent a very small adjustment in distance will yield a very large difference in bass response. The proximity effect will also increase the apparent sensitivity to plosives. Certain consonants, especially “P” and “B”, will be over-accentuated.

Wind and vibration are other factors that will affect clarity:

If the microphone is placed in a windy environment, or if the talent requires it, use a windscreen.

To reduce unwanted sound due to vibration use isolation or shock mounts. Certain microphones are designed for handheld use. Others will always require isolation mounts.

Isolation mounts should be used for orchestras, ensembles, choirs, and sources that are captured from a distance. Large diaphragm microphones should also be used with shock mounts. It should also be noted that, in general, the greater the directionality of a microphone, the more sensitive it is to handling and wind noise as well as to the proximity effect.

Certain microphones are sensitive to diaphragm overloads. In such cases either move the microphone farther from the sound source or change the microphone. Even extremely durable microphones can develop rigidity in the diaphragm as they age, which makes them prone to distortion.

Microphone Position

Microphone position is of primary importance regardless of what kind of music or program is being presented. If a microphone is correctly positioned the sound of the instrument will be captured. If a microphone is incorrectly positioned even with a perfect signal chain the sound will be compromised.

Vocals

The human voice was the first musical instrument, so regardless of culture or category of music it is the instrument that bears the greatest scrutiny. As such, artists and producers are most apprehensive and preoccupied with the recording of vocals. Achieving a great vocal sound can be compromised by the performer’s insecurities and/or the producer’s concerns and preconceptions. Clarity in the vocal is objective; you can either hear it or you cannot. The tonal coloration however, is subject to taste, available equipment and how the vocal sound fits within the rest of the tracks (or vice versa) and the remainder of the entire production.

A very important issue for engineers while recording vocals is an overabundance of bass due to the proximity effect mentioned above. Many microphones are equipped with bass filters to ameliorate this problem. Of course when recording an operatic bass this sort of filter cannot be used and the audio operator must rely on positioning. A second common problem is distortion. Distortion is not necessarily obvious nor is it always visible on the meters. Careful listening on an accurate speaker or headphone system is required.

Instruments

Every instrument presents its own particular challenge. Each instrument family has general characteristics, and each individual instrument has its own sonic personality.

Some examples:

Woodwinds:

Most of the sound from a woodwind comes through the keys. The sonic character of the instrument, the harmonic overtones and also the fundamental note all radiate from the entire instrument through the keys. Only the fundamental tones and the lowest note emanate from the bell. Flutes are the exception with the majority of the sound coming from the mouthpiece.

Horns develop their sounds from the bell.

Strings form their sounds from the bridge of the instrument. In the case of the violin family, it is a good idea to aim the microphone at both the bridge and the sound post. For guitars, the sound originates from the bridge and radiates outward across the soundboard, or top of the instrument. The sound of the instrument does not emanate from the sound hole. The sound hole acts as a bass relief port similar to the bass port on a speaker.

One of the more interesting challenges is how to make a clean recording of a highly amplified instrument such as a distorted electric guitar. In general, one should be able to hear the same sound at the amplifier as through the monitoring system. If the quality of sound remains constant regardless of the monitoring level, all should be well.

Mix balance

Assuming that all the signals are well recorded and free of distortion, clarity can be assured through both level and pan position in a mix.

Equalization is mostly used as a corrective measure. However, EQ can also be used to emphasize or deemphasize components of a mix. In a simple voice-over-background-music mix, a trick used in American radio production is to equalize the music to deemphasize the midrange frequencies so that the voice can be heard clearly while the level of the music is still apparent. A small dip (-1.5 to -4 dB) from approximately 500 Hz to 3,000 Hz in the music track will make an announcer’s voice much more present without sacrificing the level of the music. It is often easier and faster to apply a static process, such as equalization, rather than a dynamic process such as ducking, though ducking does work very well and can be quite transparent if set up properly.
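To get a feel for how gentle such a dip is, the dB figures can be converted to linear amplitude factors. The helper below is a hypothetical name used only for this sketch. It applies the standard amplitude relation, gain = 10^(dB/20).

```python
def db_to_gain(db: float) -> float:
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)

# The two ends of the midrange dip suggested for the music track.
for dip_db in (-1.5, -4.0):
    print(f"{dip_db} dB -> amplitude x {db_to_gain(dip_db):.3f}")
```

Even at the deep end of the range, the midrange of the music keeps well over half of its original amplitude. This is why the music still seems to sit at the same level.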

Some artists or producers prefer that the main voice or instrument be much louder than any of the other sources. In such cases it is still necessary to pay attention to clarity. A muffled voice will always sound like a muffled voice, even if it is the only thing in the mix.

Dynamics

Compressors, gates and duckers can all be used to enhance the clarity of a source. Limiters are considered to be mostly safety devices and are used to prevent the overall level from exceeding a given value, e.g. -3 dBFS, though they do alter the tonal quality of the signal and as such can be used to change the tonal coloration.

____________________

1 How Do Woodwind Instruments Work? J. Wolfe, University of New South Wales, 1994

Compressors were originally developed to reduce the dynamic range of broadcast and recorded material so that it would be compatible with the technology available at the time. Transmitters have dynamic range limitations; if fed with a signal that is too loud, the transmitter will be damaged. If the signal is not loud enough then the station’s broadcast coverage area will be reduced. Originally the audio signal was fed directly into the transmitter. The only level control was a skilled operator who worked with a script or a score to anticipate volume levels and keep the equipment operating at peak efficiency.

The compressor as we know it today has its origins in the CBS Laboratories in New York and Stamford, Connecticut. The Audimax, an automatic gain controller, was introduced in 1959 and the Volumax, a peak limiter, was introduced in the 1960s. Both units were solid-state electronic devices. Previous dynamics devices were tube-based and were extremely expensive.

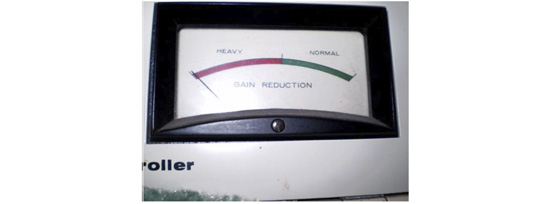

Fig 3

Fig 4

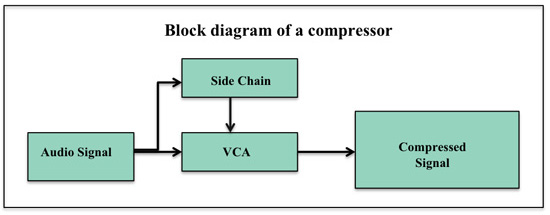

Dynamics processors function by using a control or side chain signal to determine the amount of processing applied. This control signal is derived from the input or possibly from a parallel signal.
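The side chain idea can be sketched as a minimal compressor in Python. This is a simplified illustration and not a model of any specific unit. The threshold (-20 dBFS) and ratio (4:1) are assumed example values, the function names are hypothetical, and attack and release smoothing is deliberately left out to keep the control path easy to follow.

```python
import math

def level_db(sample: float, floor_db: float = -120.0) -> float:
    """Level of one sample in dBFS, with a floor to avoid log(0)."""
    magnitude = abs(sample)
    if magnitude <= 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(magnitude))

def gain_reduction_db(control_db: float, threshold_db: float = -20.0,
                      ratio: float = 4.0) -> float:
    """Static compressor curve: gain change in dB for a given control level."""
    over = control_db - threshold_db
    if over <= 0.0:
        return 0.0                    # below threshold: no processing
    return -(over - over / ratio)     # above threshold: reduce the overshoot

def compress(audio, side_chain=None, threshold_db=-20.0, ratio=4.0):
    """Apply gain to `audio`, controlled by `side_chain` (or by audio itself)."""
    control = audio if side_chain is None else side_chain
    out = []
    for x, c in zip(audio, control):
        g_db = gain_reduction_db(level_db(c), threshold_db, ratio)
        out.append(x * 10.0 ** (g_db / 20.0))
    return out
```

When `side_chain` is omitted the control signal is derived from the input, as in an ordinary compressor. Passing a parallel signal, such as an announcer's microphone controlling a music bed, gives a simple ducker.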

____________________

2 Decibels Relative to Full Scale

3 A Short History of Transmission Audio Processing in the United States © 1992 Robert Orban

Fig 5

Compressors have the effect of accentuating whatever frequencies are most prevalent in the signal. Music tends to have more energy in the low frequency regions. Therefore over-compressing a mix will accentuate the low frequencies while masking the high frequencies, making the mix sound dull. However, this characteristic allows a compressor to be used as a de-emphasis device.

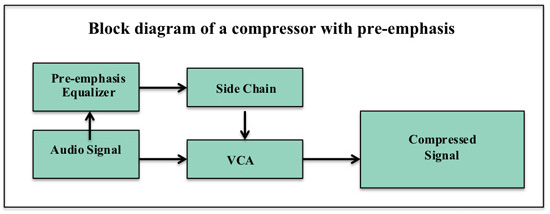

Fig 6

By exaggerating the frequencies associated with sibilance at the input to the side chain, the compressor will act on those frequencies and diminish the sibilance. Likewise, by exaggerating the frequencies associated with plosives at the input to the side chain, the compressor will act on those frequencies and diminish the plosives. In this way a barrier to intelligibility can be overcome.

Over-processing can lead to listener fatigue, diminished comprehension and even distortion. An overabundance of any process, whether it is dynamics, equalization or effects, can adversely affect the mix. It is always preferable to make corrections early in the process: with the performance, with microphone choice and position, with correct level management and so forth. Even so, there are many times when this is not possible. In such cases equalization, dynamics and other processes can be applied as corrective measures. However, being aware of the optimum procedures can lessen the need for such corrective measures.
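Returning to the side chain emphasis described above, a rough Python sketch of a de-esser follows. Everything in it is an assumption made for illustration: the first-difference filter is only a crude way to exaggerate high frequencies in the control path, the threshold and ratio are arbitrary example values, and the gain is applied to the whole signal rather than to a single band.

```python
import math

def emphasize_highs(signal):
    """First-difference filter: a crude high-pass applied to the side chain only."""
    prev = 0.0
    out = []
    for x in signal:
        out.append(x - prev)
        prev = x
    return out

def envelope(signal, release=0.999):
    """Peak follower with instant attack and exponential release."""
    env = 0.0
    out = []
    for x in signal:
        env = max(abs(x), env * release)
        out.append(env)
    return out

def de_ess(signal, threshold=0.2, ratio=4.0):
    """Turn the signal down whenever the emphasized side chain exceeds threshold."""
    control = envelope(emphasize_highs(signal))
    out = []
    for x, c in zip(signal, control):
        if c > threshold:
            over_db = 20.0 * math.log10(c / threshold)
            gain = 10.0 ** (-over_db * (1.0 - 1.0 / ratio) / 20.0)
        else:
            gain = 1.0
        out.append(x * gain)
    return out
```

Because only the control path is filtered, a low-pitched tone at the same level passes through untouched while a sibilant-range tone is pulled down. A plosive reducer would use the same structure with a low-frequency emphasis in the side chain instead.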

By carefully managing the available resources (talent or event, microphone selection and position, equipment and time), it is possible to maintain intelligibility in the components of any given mix.

Maintaining clarity requires a basic understanding of the physics of sound as applied to microphone technique, familiarity with the available equipment and in general, attention to detail.

Daniel Littwin, director, New York Digital.

contact: daniel.nydigital@gmail.com

São Paulo, Brazil